An EU funding experiment: Can OpenAI's GPT4 write a proper grant application?

I have never seen anyone openly talk about the ins and outs of the grant application process. Nor have I heard about anyone using GPT4 to write an application and analyse the results, so let's change that. Experiments are fun.

If you are a startup founder, an evaluator of grant applications or anyone working with startups, this article will give you valuable information. I hope you get some insights that you will use to better the startup ecosystem. Otherwise Elona Musk will put you on the "naughty list" and you really don't want that.

TL;DR (for those who hate suspense)

- Application submitted: April 6, 2023

- Evaluation received: June 30, 2023

- Time needed to apply: around 8 hours, including coffee breaks and reading the requirements

- How long it took to get the evaluation: almost 3 months

- Requirements documentation to be read: 40 pages in total

- Application to be filled: 10 pages

- AI/human work ratio: 80/20

- Results: scored above the threshold but didn’t make it to the next round

- Value of the experiment: priceless as it was beta testing of an early prototype

- Get the MVP of the "Funding applications assistant": waitlist here

Context

We (i.e. a team of crazy startup founders) have been applying for different grants for several years and have experience with different types of applications (national and EU-level). Success rate is alright, but seeing as nobody shares their results, it's hard to say where we stand. Seeing as I still don't have a yacht, the success rate can't be that great, right?

Oh yeah, before your eye starts twitching, I have a weird sense of humor, so read on if you can take a joke. Moreover, even though I call myself MeanCEO, I mean well (I warned you about my humor) and all the seemingly negative things that I will highlight in this article are here to serve a purpose: to initiate positive change for startups in the grant application and evaluation process, because currently it is highly inefficient.

Anyways, it was early April and we had just got exclusive access to GPT4 as early developers for OpenAI (yes, I'm proud of that), along with a lot of credits to play with. I was bursting with ideas that I wanted to test and, of course, writing a funding application with the help of AI was at the top of my long geeky list. Writing applications is a tedious process that I utterly hate (in part because, as an educator, I consider the process to be outdated, inefficient, closed off and unfair towards both applicants and evaluators).

Before you ask, I did try to give my feedback but nobody seems to care.

And yes, I am sure there are better ways to decide who to fund that are quicker, more reliable and less painful. And I will eventually build this system myself if nobody wants to do it. But this article is not about that.

Back to the story: I took a look at my list of open calls and chose one simply because it was closing in a few days and the application process seemed easy enough, which is ideal for the first beta test. I didn't really want to participate in the call for real, but the topic itself is not new to me (our Live Certificates project is rather similar to what GPT4 offered to apply with) and I know enough to check if AI is writing something that makes sense or not.

NB. Never use GPT to work with topics you have no idea about

Read the complete application at the end of this article and get on the waitlist to be the first to try the "Funding application assistant" MVP.

So off to the OpenAI playground I go…

Let's try to make it as concise as possible:

Human workload:

- reading the requirements (this can be automated but I didn’t want to spend time on doing it back then)

- writing the prompt (including the requirements from the previous document and information about the team/company/previous experience)

- creating a pretty Gantt chart in Canva out of the AI-provided data (this can be automated with a bit of effort)

- googling and adding some market data and revenue projections to the business potential part (this can be automated now that GPT has access to the internet)

- reading the draft, editing a bit and making sure it fits the 10 page limit (this cannot be automated as QA is exactly where a human is needed)

GPT4 did everything else, INCLUDING coming up with the idea, which I don't recommend if you want to actually get the grant. LLMs (Large Language Models) are not that creative by default, so you won't score high on the innovation criterion as I will show you later.

When I started filling in the application, I had no particular idea in mind because I wanted GPT to do as much as it is capable of. This means that I couldn't expect it to get real market data or do the revenue projections, as it's a language model and not an accounting model. I did, however, indicate my preference for one of the application tracks: education.
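The article does not show the actual prompt, so the following is only a minimal sketch of how the prompt-writing step from the workload list could look: the call requirements, the applicant details and the preferred track are stitched into a single block of text that can be pasted into the playground. Every name here (`ApplicantInfo`, `build_application_prompt`, the wording of the instructions) is a hypothetical illustration, not what was really used.

```python
from dataclasses import dataclass


@dataclass
class ApplicantInfo:
    """Hypothetical container for the applicant details fed into the prompt."""
    team: str
    company: str
    previous_experience: str


def build_application_prompt(requirements: str, applicant: ApplicantInfo,
                             track: str, page_limit: int = 10) -> str:
    """Join the call requirements and applicant details into one plain-text prompt."""
    sections = [
        "You are drafting a grant application.",
        f"Preferred application track: {track}",
        f"Maximum length: {page_limit} pages",
        "Call requirements:\n" + requirements.strip(),
        "Team:\n" + applicant.team.strip(),
        "Company:\n" + applicant.company.strip(),
        "Previous experience:\n" + applicant.previous_experience.strip(),
        # Assumed instruction wording; the idea itself was left to the model.
        "Propose a project idea that fits the requirements, "
        "then draft every section of the application.",
    ]
    return "\n\n".join(sections)


# Placeholder inputs only; real requirements would come from the call documents.
example = build_application_prompt(
    requirements="(relevant parts of the requirements document)",
    applicant=ApplicantInfo(
        team="(team description)",
        company="(company description)",
        previous_experience="(previous projects)",
    ),
    track="education",
)
print(example)
```

Keeping the prompt as a plain function of its inputs makes it easy to reuse for the next call: only the requirements text and the track change, while the applicant details stay the same.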

This very application that was part of the experiment is one of those where people behind it care more about the tech than the business case. Allocating only half a page (out of the maximum 10 pages for the whole application) to discuss the business model and market projections supports my point of view quite well.

Moreover, the two evaluators gave very different opinions about how GPT and I approached the business model description. While one said that “a business model is properly described”, the other claimed that “the business model … not clearly described”. This can of course be explained by the different backgrounds of the evaluators, but what's the value of this evaluation for the applicant? None in this case, as I have no idea who the evaluators are or what exactly wasn't clear.

This is one of the biggest problems that I see with this kind of anonymous evaluation process. I see a lot of potential for AI in this domain. I have been perfecting GPT4 for evaluations and feedback for several months and we're beta testing several tools with hundreds of users. So far I'm quite happy with what I'm seeing.

Long story short, when GPT was ready with drafting the application I immediately knew that it would be considered too light on the tech. I didn't want to spend extra time on figuring out the architecture that had to be used so I accepted the risk.

It's one of those calls where the consortium previously built a foundation layer and is eager to get startups to build on top of that layer via cascade funding. I don't see the value of that very architecture (no offence to the people behind it, it's just my personal opinion) and I have no desire to build something that has (in my opinion) zero chance of mass adoption.

All other parts of the application were written rather well in the style that evaluators eat up (GPT wrote an ode to impact, described the process of dissemination for the sake of accountability and other things that EU requires) which made me think that GPT4 must have been trained on a lot of bureaucratic data.

Evaluation results

Tada! Here's the juiciest part: a few pages of the evaluation report.

Total score: 36.5 (threshold: 30/50)

Seeing as it’s the first test, getting enough points to get above the threshold is a win in my book.

Why didn't it make it to the next round? They must have had more applicants with scores higher than this and they hit the quota. That's just a guess because I don't think they disclose how they actually choose among those who got above the threshold.

Let's go through the 3 parts of the evaluation:

- excellence and innovation;

- expected impact and value for money;

- project implementation

Each part contains several sentences that highlight the strong points and a similar number of sentences that discuss weak points. There are two evaluators but you don't really know who writes what unless you analyse their language and way of writing, which I always do, because once a linguist, always a linguist.

I will remove references to the call itself from the evaluation report and substitute them with XXX.

I will add my comments in brackets and highlight them with light purple to differentiate my words from the evaluators'.

Excellence and innovation: 3.5 (threshold 4).

This is what is being evaluated here:

- Clarity, pertinence, soundness of the proposed solution in the XXX context and credibility of the proposed methodology including the user centric approach

- Extent that the proposed work is beyond the state of the art and demonstrate innovation potential in relation to XXX objective

- Excellence/Capacity of the applicant to deliver the proposed solution

Strengths:

- The project vision is clearly presented. The intention to validate the user centric digital identity management system for online education is well described.

- The intention to test a new model to identify and validate on a large scale a new decentralised, user-centric digital identity management system for online education is well presented.

- A pilot with adequate size is planned, which is commendable.

- The team has previous experience to implement the technological part.

- The solution complies with the principles of user-centric solution design in that it envisages the implementation of a pilot of adequate size with a target market structure.

- The objectives should favour mass adoption of the service, also thanks to validation with a wide audience of users.

- Previous experience and the projects presented justify well the company capacity.

Weaknesses:

- The proposal does not describe well the requirements for the system. (This is what I mentioned about the application being light on the tech itself, so no surprise here. GPT didn't do the best job here.)

- The target to reach TRL7 is not sufficiently justified, bearing in mind the lack of expertise in the users' domain. (I have no idea what "the users' domain" means. Moreover, the question in the application was "Explain the maturity of your product/prototype and the expected maturity at the end of the project (current and expected Technology Readiness Level)", so if they needed the applicant to justify it, that's exactly the word they should have used instead of "explain". GPT did everything correctly here.)

- The need for a decentralised, user-centric digital identity management system for online education is not presented in sufficient detail, for instance what are the problems to be solved. (What exactly is sufficient detail? GPT doesn't understand what sufficient is. Take a look at the application at the end of this article and judge for yourself.)

- The partner is involved with unclear agreement exists between the proposer and the experimenter. (This is one of those "you didn't ask, so I didn't tell" situations that happen too often in applications when an evaluator needs clarification but the applicant can't give it because the evaluator can't ask for it.)

My thoughts:

It is a surprise to see a rather high score (even though it's a bit below the threshold here) seeing as it’s a 100% AI-generated idea (moreover, it’s the first attempt) constructed from parts of the requirements document. I looked through the document and fed parts of it to AI. It feels like AI gave the evaluators almost exactly what they wanted, but didn’t blow their minds with innovation.

We have a FREE STARTUP SCHOOL with AI FOR STARTUPS MODULE run by our very own Elona Musk, the AI co-founder.

Sign up for the startup school and get started immediately.

Expected impact and value for money: 3.5 (threshold 3)

This is what is being evaluated here:

- Contribution to XXX overall goal to create a portfolio of XXX protocols and ecosystem of decentralised identity management software solutions that is transparent to the users, interoperable, privacy aware and regulatory compliant.

- Impact of the proposed innovation on the needs of the European and global markets.

Strengths:

- The alignment with the overall project and call objectives, in particular with a specific focus on secure, user-centric digital identity management systems for educational micro-credentials, trust-based frameworks through verifiable credentials and decentralised reputation systems, ensuring trustworthiness and regulatory compliance, addressing the needs of non-technical communities, is well presented.

- A business model is properly described. The dissemination plan is appropriate to the project objectives and consistent with the planned activities.

- The development of a vision for the entire European industry of the sector, and the approach based on security, privacy and control of the data transferred in the process, is appropriate to qualify as a builder of a good practice to be shared and replicated.

- In addition to a good description of the business model, of the reference scenario and of the possible industrial impact, the proposer presented a dissemination plan appropriate to the project objectives and consistent with the actions it intends to put into practice.

Weaknesses:

- The added value of a decentralised identity system in the specific reference sector is not clearly identified. (This was already mentioned in the previous part.)

- The properties of the solution are not fully compared with other existing digital wallets and initiatives for microcredentials. In addition, the use of the digital identity is not properly described in the given context. It is particularly unclear how usability aspects will be demonstrated. (Once again, the solution was not described in detail by GPT, so GPT's culpa.)

- The business model and scalability strategy, the KPIs to be achieved and the current proof of traction are not clearly described. (GPT satisfied one evaluator but not the other one.)

- The dissemination plan does not sufficiently include KPIs project such as number of posts and type of content, interaction KPIs, qualitative results in terms of new leads. (Number of posts, that's a funny one. Is there a KPI that's more useless than the number of posts in the era of AI-generated content? I don't recall KPIs being required with respect to the dissemination plan (by the way, dissemination is what normal people call digital marketing), therefore, not GPT's fault.)

My thoughts:

Overall, GPT scored above the threshold here. The feedback in this part of the evaluation was rather confusing, as the evaluators provided very different views. Moreover, as often happens, they expected to see something in the application that wasn't clearly asked for, and seeing as neither GPT nor I are mind readers, we failed to provide details that could easily have been provided if only the questions in the application had been more detailed.

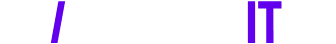

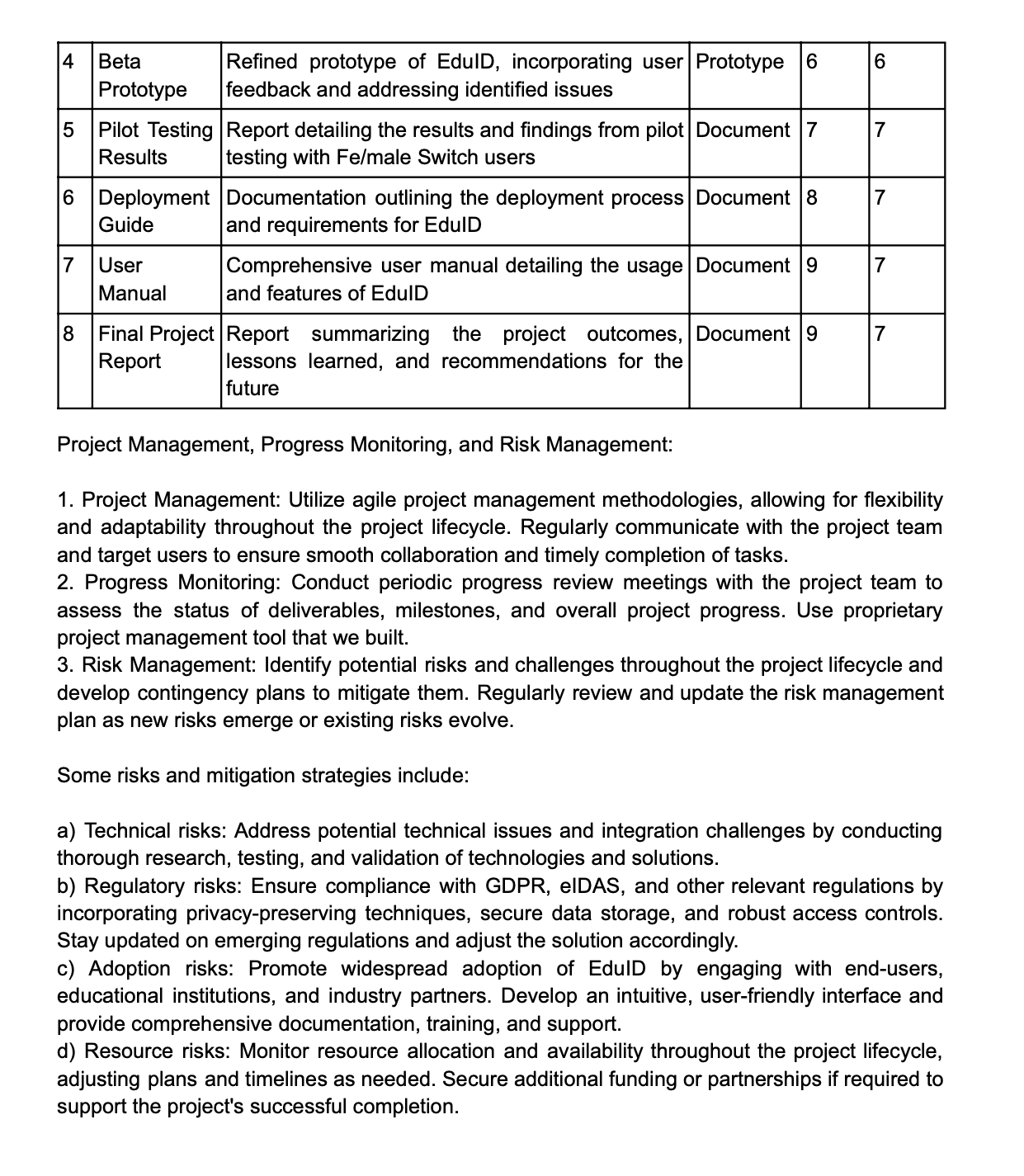

Project implementation: 4 (threshold 3)

This is what is being evaluated here:

- Quality and effectiveness of the work plan including extent to which the resources assigned to the work are in line with its objectives and deliverables

- Quality and effectiveness of the management procedures including risks and mitigation management

- Integration capacity in the XXX ecosystem

Strengths:

- A credible and well-articulated development plan is presented with an Agile development model in line with the user-centered design objectives of the solution.

- The activities are adequately documented and organised and, in line with the objectives, including two phases of continuous community engagement and feedback integration.

- The project management and risk management activities are adequately described. Moreover, an initial definition of risk mitigation actions is provided.

- The project organisation shows a general ability of the proposer to carry out the activities according to the outlined objectives.

Weaknesses:

- Activities related to the engagement of user groups are not adequately planned. The strategy for engaging targeted users and the partner engagement model for testing and validation activities is unclear (letter of intent, MOU, etc.). (Activities like "webinars, workshops, user testing in a pilot, etc." were mentioned, but I assume a waitlist with 1,000 users would have been better.)

- The ability to integrate with the XXX ecosystem has not been defined adequately. (I assume they mean technical details here, but I can't be sure.)

My thoughts:

Overall, GPT scored the highest here and that's awesome news for me because "deliverables, milestones, work packages" is what I hate the most in applications. This means that I can reliably use GPT for the "implementation" parts of applications knowing that it will do a better job than me.

Overall Comments

The proposal addresses sufficiently the criteria but fails to proceed to the online interview because of its ranking position, due to the competition.

My thoughts:

An online interview would have been awkward.

I am quite happy with the experiment. My conclusion, that GPT4 can and should be used in applications (to decrease the burden on the applicant), is good news for all startups.

Evaluators can and should use GPT to increase the quality and remove bias from their evaluations.

And I will proceed to turning my prototype into an MVP and continue making it better.

Do you have an urge to become an entrepreneur? That's a great start! First off, check your entrepreneurial potential to know what your strengths and weaknesses are. Analyse your entrepreneurial potential here and train your startup muscles as you go.

For everything else, there's Elona, the AI startup co-founder for you!

The application

(minus the sensitive information: info about the company and team and Gantt chart)

PROJECT SUMMARY

Educational Micro-Credentials

EduID is an open-source decentralized, user-centric digital identity management system specifically designed for the online education sector, focusing on the management of micro-credentials and micro-competencies. Built on blockchain technology, the solution aims to provide a secure, privacy-preserving platform that empowers individuals to take control of their educational achievements while ensuring trustworthiness and compliance with GDPR and eIDAS regulations.

The project will leverage advanced technologies such as verifiable credentials, decentralized reputation systems, and privacy-preserving techniques to create a scalable and interoperable solution that seamlessly integrates with existing identity management frameworks and supports cross-border data exchange within the EU.

In addition to its technical capabilities, EduID is committed to user friendliness, addressing the needs of non technical people. This focus on accessibility ensures that the platform caters to a diverse user base, promoting wider adoption of decentralized digital identity solutions in the online education sector.

To validate the proof of concept and ensure a strong product-market fit, the project team will collaborate with the (partner's name) user base and other relevant educational institutions for pilot testing. This co-creation approach ensures that the solution is designed and refined based on real-world user feedback and requirements.

By the end of the 9-month development period, EduID is expected to achieve a Technology Readiness Level (TRL) of 7, demonstrating a well-tested and validated system ready for deployment in real-world educational settings. With its unique combination of privacy preservation, trustworthiness assessment, and inclusivity, EduID aims to contribute to the XXX project objectives and advance the field of human-centric decentralized digital identity management in education.

DETAILED PROPOSAL DESCRIPTION

3.1 CONCEPT AND OBJECTIVES

Specific Objectives:

1. Develop a decentralized, user-centric identity management system that focuses on educational micro-credentials and micro-competencies. Integrate privacy preservation, verifiable credentials, and decentralized reputation systems to ensure trustworthiness and compliance with regulations like GDPR and eIDAS.

2. Pilot test and validate the solution with upto 1,000 users, ensuring effective evaluation in a real end-user setting. Foster widespread adoption through an engaging, accessible, and user-friendly interface that prioritizes human-centric design principles.

Needs:

A user-friendly identity management system for education that people are actually going to enjoy using.

Our proposal addresses the following challenges:

1. Decentralized identity management: EduID is built on a decentralized architecture using blockchain, giving individuals control over their own digital identities and micro-credentials.

2. Privacy preservation: We employ techniques such as zero-knowledge proofs and secure multi-party computation to protect user privacy and ensure GDPR compliance.

3. Trustworthiness assessment: Our system incorporates verifiable credentials and decentralized reputation systems to assess the trustworthiness of entities and data.

Existing Solutions:

Several existing solutions, such as SSI platforms and eIDAS-compliant systems, partly address the challenges of decentralized digital identity management. However, they often lack a specific focus on educational micro-credentials, privacy preservation, trustworthiness assessment, and most importantly user-friendliness which prevents them from mass adoption.

Human-Centric Approach:

EduID prioritizes a human-centric design approach, involving end-users and stakeholders throughout the development process. We will co-create the solution with potential users, ensuring it addresses their needs and preferences while considering security, privacy, human rights, and sustainability. Around 1,000 users will take part in various stages of the product development and testing throughout the project.

Our proposal offers a unique value proposition that anyone can relate to:

Safe and easy way to identify yourself and be in charge of your educational credentials.

The integration of EduID into the XXX Large Scale Pilot would not only demonstrate the practical application of XXX's principles and technologies in the education sector but also contribute to the broader objectives of the project by promoting adoption, collaboration, and innovation in the field of decentralized digital identity management.

3.1 CONCEPT AND OBJECTIVES

Specific Objectives:

1. Develop a decentralized, user-centric identity management system that focuses on educational micro-credentials and micro-competencies. Integrate privacy preservation, verifiable credentials, and decentralized reputation systems to ensure trustworthiness and compliance with regulations like GDPR and eIDAS.

2. Pilot test and validate the solution with up to 1,000 users, ensuring effective evaluation in a real end-user setting. Foster widespread adoption through an engaging, accessible, and user-friendly interface that prioritizes human-centric design principles.

Needs:

A user-friendly identity management system for education that people are actually going to enjoy using.

Our proposal addresses the following challenges:

1. Decentralized identity management: EduID is built on a decentralized architecture using blockchain, giving individuals control over their own digital identities and micro-credentials.

2. Privacy preservation: We employ techniques such as zero-knowledge proofs and secure multi-party computation to protect user privacy and ensure GDPR compliance.

3. Trustworthiness assessment: Our system incorporates verifiable credentials and decentralized reputation systems to assess the trustworthiness of entities and data.
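To make the term "verifiable credentials" concrete, here is a minimal sketch of what a micro-credential could look like when shaped after the W3C Verifiable Credentials Data Model. It is an illustration only, not EduID's actual format: the `MicroCredential` type, the `achievement` field, the DIDs and both helper functions are hypothetical, and the cryptographic `proof` that makes a credential verifiable is left out.

```python
# Top-level properties that the W3C VC Data Model v1.1 requires on every credential.
REQUIRED_FIELDS = ("@context", "type", "issuer", "issuanceDate", "credentialSubject")


def build_micro_credential(issuer_did, holder_did, course, issued_at):
    """Return an unsigned credential as a plain dict (hypothetical helper)."""
    return {
        "@context": ["https://www.w3.org/2018/credentials/v1"],
        # "MicroCredential" is an assumed custom type; a real one would need
        # its own JSON-LD context that defines it.
        "type": ["VerifiableCredential", "MicroCredential"],
        "issuer": issuer_did,
        "issuanceDate": issued_at,
        "credentialSubject": {"id": holder_did, "achievement": course},
    }


def missing_fields(credential):
    """List the required top-level properties that are absent."""
    return [field for field in REQUIRED_FIELDS if field not in credential]


credential = build_micro_credential(
    "did:example:school",       # placeholder issuer
    "did:example:learner",      # placeholder holder
    "Intro to Startups",
    "2023-04-06T00:00:00Z",
)
print(missing_fields(credential))
```

A real issuer would sign this structure, and a verifier would check that signature before trusting any of the claims.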

Existing Solutions:

Several existing solutions, such as SSI platforms and eIDAS-compliant systems, partly address the challenges of decentralized digital identity management. However, they often lack a specific focus on educational micro-credentials, privacy preservation, trustworthiness assessment and, most importantly, user-friendliness, which holds them back from mass adoption.

Human-Centric Approach:

EduID prioritizes a human-centric design approach, involving end-users and stakeholders throughout the development process. We will co-create the solution with potential users, ensuring it addresses their needs and preferences while considering security, privacy, human rights, and sustainability. Around 1,000 users will take part in various stages of the product development and testing throughout the project.

Our proposal offers a unique value proposition that anyone can relate to:

Safe and easy way to identify yourself and be in charge of your educational credentials.

The integration of EduID into the XXX Large Scale Pilot would not only demonstrate the practical application of XXX's principles and technologies in the education sector but also contribute to the broader objectives of the project by promoting adoption, collaboration, and innovation in the field of decentralized digital identity management.

3.2 PROPOSAL SOLUTION

Product Description:

EduID is a decentralized, user-centric digital identity management system for online education. It integrates must-have features such as privacy preservation, verifiable credentials, and decentralized reputation systems to ensure trustworthiness and compliance with the GDPR and eIDAS regulations. It combines the power of 3D object recognition as part of the authentication process with the ease of use that stimulates mass adoption.

To address the challenge of decentralized digital identity, EduID focuses on the following key aspects:

1. User-led design: if we are building a tool for individuals to manage, control, and share their educational micro-credentials, then these target users must be part of the development process from day 1.

4. Inclusivity: we prioritize the needs of non-technical communities, ensuring equal access to education and opportunities.

Main Differentiator:

Co-developed and tested by a large number of potential target users without a technical background.

Maturity and Expected TRL:

The current maturity of EduID's product/prototype is TRL 4, with a functional prototype demonstrating the core features in a controlled environment (integrated as part of the startup game pilot). By the end of the project, we expect to achieve TRL 7, with a well-tested and validated system ready for deployment in a real-world educational setting.

Validation Approach:

To validate the proof of concept, we will collaborate with the (partner's name) user base and other relevant educational institutions for pilot testing.

The validation process will include:

1. Test scenarios: Conduct tests in various educational contexts, such as issuing, sharing, and verifying micro-credentials, as well as assessing trustworthiness and reputation.

2. Deployment size: Pilot the solution with a diverse group of up to 1,000 users, collect feedback and immediately integrate it into the prototype.

3. User feedback: Gather feedback from pilot testers to evaluate the solution's usability, effectiveness, and impact on their educational experience.

4. Legal and Ethical clearance: Ensure compliance with ethical guidelines and data protection regulations throughout the testing process.

This approach will help us demonstrate the value and potential of EduID in addressing the challenges of decentralized digital identity management while meeting the XXX objectives and requirements.

3.3 EXPECTED IMPACT

EduID will contribute to the XXX project objectives and the better acceptance of decentralized digital identity by:

1. Offering a secure, user-centric digital identity management system for educational micro-credentials, empowering individuals to manage and share their credentials with increased privacy and control.

2. Promoting trust-based frameworks through verifiable credentials and decentralized reputation systems, ensuring trustworthiness and regulatory compliance.

3. Focusing on inclusivity by addressing the needs of non-technical communities, encouraging wider adoption of decentralized digital identity solutions.

EduID adds value to the XXX project by:

1. Providing a use case specifically focused on education, showcasing the potential of decentralized digital identity in a growing sector of online education.

2. Demonstrating the effectiveness of combining privacy preservation, trustworthiness assessment, and inclusivity in a decentralized identity management solution.

3. Contributing to the development of best practices, standards, and guidelines for decentralized digital identity management that can be mass adopted.

4. Facilitating collaboration with relevant stakeholders, including a growing number of potential target users, the majority of them women, which is a huge plus for the whole blockchain industry.

EduID's impact on the European and global industry includes:

1. Enhancing the competitiveness of the European educational sector by providing a user-friendly decentralized identity management solution co-created and tested by up to 1,000 female users.

2. Fostering innovation in the field of digital identity management and encouraging the development of new solutions and applications.

3. Promoting European expertise in privacy-preserving technologies and trust-based frameworks, positioning Europe as a leader in decentralized digital identity management.

EduID will enhance our own business and competitiveness by:

1. Establishing a strong market presence in the niche of educational micro-credentials and decentralized identity management.

2. Expanding our product portfolio by integrating EduID into the solution that we are building in the domain of trade secrets and digital assets.

3. Building strategic partnerships with educational institutions, industry partners, and end-users, driving adoption and collaboration.

4. Leveraging the support and resources provided by the XXX project to accelerate product development and market entry.

EduID's socio-economic and environmental impact includes:

1. Empowering women to take control of their educational credentials, enhancing employability and lifelong learning opportunities.

2. Promoting equal access to education and opportunities for marginalized communities, contributing to social inclusion and economic growth.

3. Encouraging the adoption of decentralized digital identity solutions, reducing the reliance on centralized systems and minimizing the risk of data breaches.

4. Utilizing energy-efficient technologies and practices, minimizing the environmental impact of the proposed solution.

To maximize the impact of EduID, our dissemination and communication plan includes:

1. Developing a project website, showcasing the solution, its benefits, progress updates and a wait-list.

2. Engaging with relevant stakeholders, including educational institutions, industry partners, policymakers, and end-users, through workshops, webinars, and conferences.

3. Publishing articles, blog posts, and whitepapers, sharing insights and findings from the project.

4. Utilizing social media and public relations activities to raise awareness about EduID and the benefits of decentralized digital identity management.

5. Including information about EduID in our newsletter.

Our Data Management Plan comprises the following aspects:

1. Data identification: Identify and categorize the types of data generated or collected during the project, including user information, micro-credentials, and feedback.

2. Data storage and security: Implement secure storage solutions and encryption mechanisms to protect user data and ensure compliance with GDPR and other relevant regulations.

3. Data access and sharing: Define clear policies and procedures for accessing and sharing data within the project team and with external stakeholders, ensuring proper authorization and consent.

4. Data retention and disposal: Establish guidelines for data retention and disposal, ensuring that data is securely deleted when no longer needed or required by law.

5. Data ethics and privacy: Ensure compliance with ethical guidelines and privacy regulations throughout the project, including obtaining informed consent from users and conducting regular audits.

3.4 BUSINESS MODEL AND SUSTAINABILITY

Business Potential:

The business potential of EduID lies in the growing demand for user-friendly, secure digital identity management solutions in the online education sector. With an increasing focus on lifelong learning, micro-credentials, and personalized learning paths, the need for a decentralized identity management system tailored for education is evident. EduID addresses this market gap, targeting educational institutions, learners, and employers, and so caters to a wide customer base. EduID is well positioned for the $5.5 trillion education market. The e-learning market is projected to reach $840.11 billion by 2030, with the European e-learning market reaching $130 billion by 2026, according to Graphical Research. According to Eurostat, at least 27% of the European population took an online course in 2021, which amounts to around 200 million people. Even with a market share of 1%, that is at least 2 million people annually who need to be certified via numerous institutions.
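As a sanity check on the user numbers in the paragraph above, the arithmetic can be sketched in a few lines. The inputs are the figures quoted in the application; the function names are made up for illustration, and the population base is back-derived from those figures rather than taken from any source.

```python
def implied_population(learners: float, share_of_population: float) -> float:
    """Population base that the quoted learner count and share imply."""
    return learners / share_of_population


def users_at_share(learners: float, market_share: float) -> float:
    """Number of learners captured at a given market share."""
    return learners * market_share


ONLINE_LEARNERS = 200_000_000   # "around 200 million people"
SHARE_OF_POPULATION = 0.27      # "at least 27% of the European population"
MARKET_SHARE = 0.01             # "a market share of 1%"

base = implied_population(ONLINE_LEARNERS, SHARE_OF_POPULATION)
users = users_at_share(ONLINE_LEARNERS, MARKET_SHARE)

print(f"Implied population base: {base / 1e6:.0f} million")
print(f"Users at 1% market share: {users / 1e6:.0f} million")
```

The 2 million figure follows directly from the 200 million. The implied base of roughly 740 million, however, is close to the population of the whole European continent rather than the EU alone (about 447 million), so the 200 million only holds if the Eurostat percentage is applied to all of Europe.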

EduID's business model consists of the following revenue streams:

1. Subscription fees (B2C): Offer tiered subscription plans for educational institutions, providing access to the platform's features and services.

2. Licensing fees (B2B): License the use of EduID's technology to third-party platforms, enabling them to integrate our decentralized identity management solution into their existing systems.

3. Customization and consulting services: Provide customization and consulting services to institutions and organizations looking to tailor EduID to their specific requirements or integrate it into their infrastructure.

Next Steps Towards Economic Sustainability and Large-Scale Deployment:

1. Refine and enhance the EduID solution based on pilot testing and user feedback, ensuring a strong product-market fit. This includes support of different devices and languages.

2. Establish partnerships with educational institutions, governmental organizations, and private sector entities to promote widespread adoption and collaboration.

3. Secure additional funding through grants, investments, or strategic partnerships to support the market growth of EduID. We will seek at least EUR 500,000 in follow-up investment.

4. With development costs roughly equal to marketing costs and decreasing in Years 2 and 3, we expect to break even in Year 3 and finish that year with EUR 100,000 in revenue under the realistic scenario.

EduID is committed to environmental sustainability and will act on that commitment by:

1. Utilizing energy-efficient blockchain technologies, minimizing the energy consumption and carbon footprint of the platform.

2. Continuously monitoring and evaluating the energy efficiency of our solution, adapting to advancements in technology and best practices to further minimize environmental impact.

3. Raising awareness about the importance of environmental sustainability among our users, partners, and stakeholders, fostering a culture of responsibility and commitment to protecting the environment.

If you want to get your hands on the MVP of the "Funding applications assistant", get on the waitlist here.